Snowflake SQL

To run models on Snowflake, you need to create a SQL fabric with a Snowflake connection.

Snowflake fabrics support models only. You cannot run pipelines in a SQL project that uses a Snowflake connection.

Create a fabric

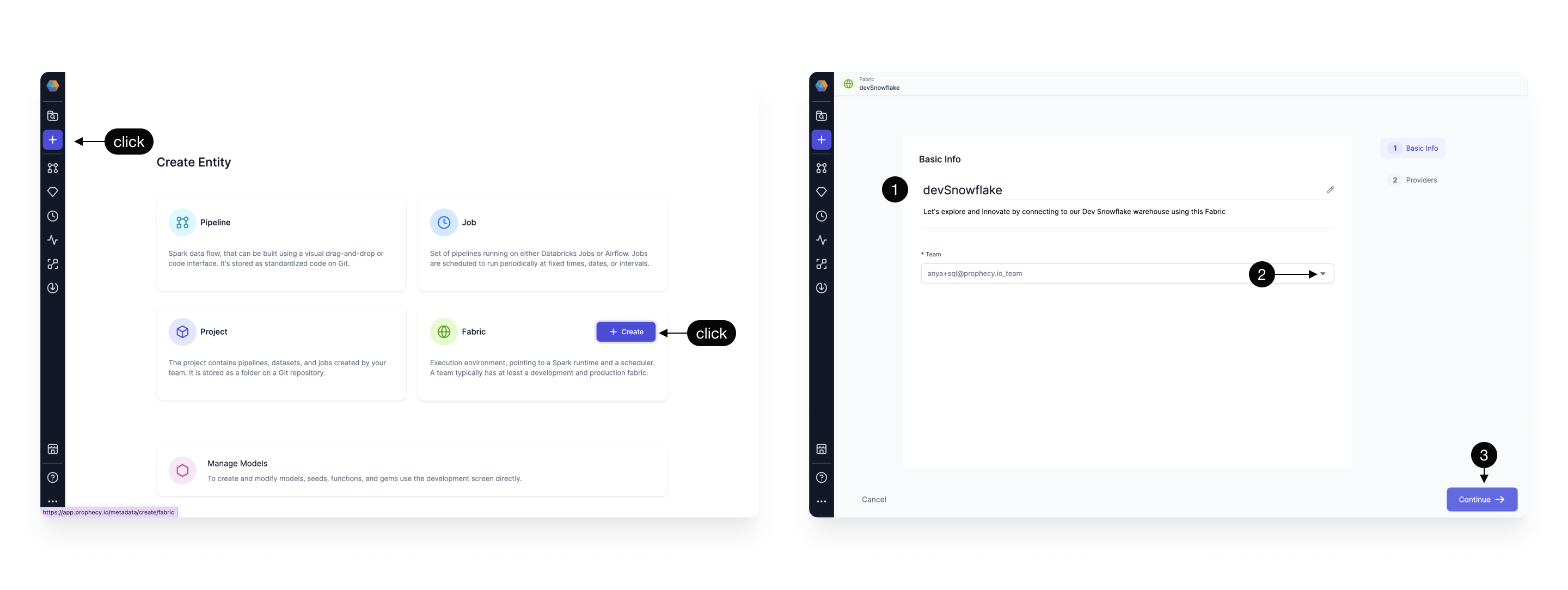

Fabrics define your Prophecy project execution environment. To create a new fabric:

- Click the Create Entity button in the left navigation bar.

- Click on the Fabric tile.

Basic Info

Next, complete the fields in the Basic Info page.

- Provide a fabric title. It can be helpful to include descriptors like dev or prod in your title.

- (Optional) Provide a fabric description.

- Select a team to own this fabric. Open the dropdown to see the teams you belong to.

- Click Continue.

Provider

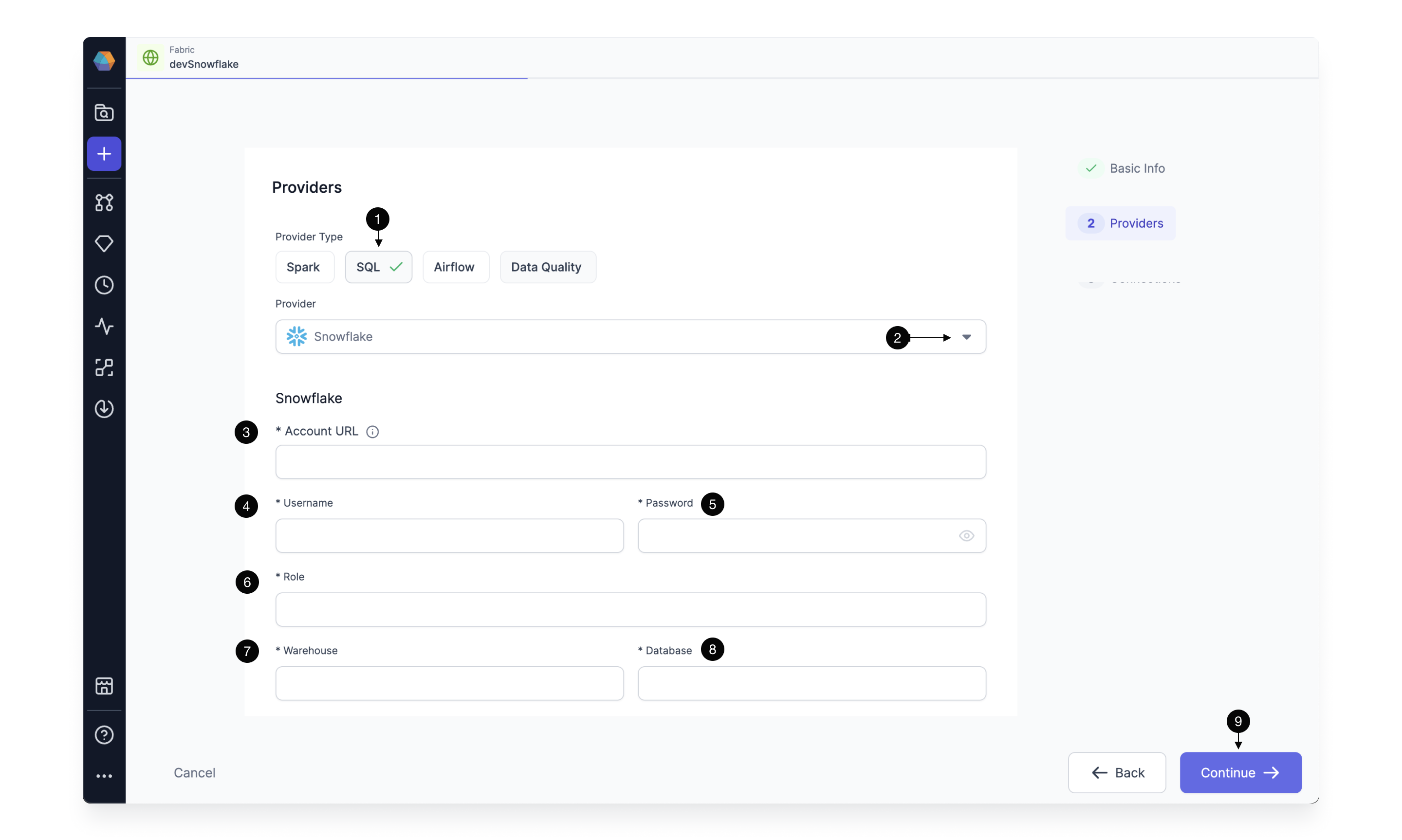

The SQL provider is both the storage warehouse and the execution environment where your SQL code will run. To configure the provider:

- Select SQL as the Provider type.

- Open the dropdown menu of supported providers and select Snowflake.

- Add the Snowflake Account URL (for example, https://<org>-<account>.snowflakecomputing.com).

- Add the username that will be used to connect to the Snowflake Warehouse.

- Add the password that will be used to connect to the Snowflake Warehouse.

Info: Each Prophecy user provides their own username and password when they log in. Prophecy respects the access scope of these Snowflake credentials, so users in Prophecy can read tables from every database and schema to which they have access in Snowflake. The username/password credentials are encrypted for secure storage.

- Add the Snowflake role that Prophecy will use to read data and execute queries on the Snowflake Warehouse. The role must already be granted to the user specified above.

- Specify the Snowflake warehouse that this execution environment uses by default for writes.

- Specify the Snowflake database and schema to which tables are written by default.

- Click Continue to complete fabric setup.
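If you want to sanity-check the Provider values before entering them, the following Python sketch shows one way to do it. It is illustrative only: `SnowflakeFabricSettings`, `parse_account_url`, and `validate` are hypothetical names, not part of Prophecy or any Snowflake library. The sketch assumes the organization name contains no hyphen and accepts only the `https://<org>-<account>.snowflakecomputing.com` form shown above; other Snowflake URL formats (such as legacy account-locator URLs) are rejected.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

SNOWFLAKE_DOMAIN = ".snowflakecomputing.com"


@dataclass
class SnowflakeFabricSettings:
    """Hypothetical container mirroring the Provider fields described above."""
    account_url: str
    username: str
    password: str
    role: str
    warehouse: str
    database: str
    schema: str


def parse_account_url(url: str) -> tuple[str, str]:
    """Split https://<org>-<account>.snowflakecomputing.com into (org, account).

    Assumption: the organization name contains no hyphen, so the first
    hyphen separates it from the account name. Other URL forms raise.
    """
    parsed = urlparse(url)
    if parsed.scheme != "https":
        raise ValueError("account URL must use https")
    host = parsed.hostname or ""  # urlparse lowercases the hostname
    if not host.endswith(SNOWFLAKE_DOMAIN):
        raise ValueError(f"host must end with {SNOWFLAKE_DOMAIN}")
    prefix = host[: -len(SNOWFLAKE_DOMAIN)]
    org, sep, account = prefix.partition("-")
    # Reject missing parts and dotted prefixes such as locator/region URLs.
    if not sep or not org or not account or "." in prefix:
        raise ValueError("expected https://<org>-<account>.snowflakecomputing.com")
    return org, account


def validate(settings: SnowflakeFabricSettings) -> list[str]:
    """Return a list of problems; an empty list means every field looks usable."""
    problems = []
    try:
        parse_account_url(settings.account_url)
    except ValueError as exc:
        problems.append(f"account_url: {exc}")
    # Every remaining Provider field is required and must not be blank.
    for name in ("username", "password", "role", "warehouse", "database", "schema"):
        if not getattr(settings, name).strip():
            problems.append(f"{name}: must not be empty")
    return problems


if __name__ == "__main__":
    settings = SnowflakeFabricSettings(
        account_url="https://myorg-myaccount.snowflakecomputing.com",
        username="analyst",
        password="not-a-real-password",
        role="ANALYST_ROLE",
        warehouse="COMPUTE_WH",
        database="ANALYTICS",
        schema="PUBLIC",
    )
    print(parse_account_url(settings.account_url))  # ('myorg', 'myaccount')
    print(validate(settings))  # []
```

This only checks the shape of the values; whether the role is actually granted to the user, or the warehouse, database, and schema exist, can only be confirmed by Snowflake itself.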

What's next

Now that you are familiar with setting up Snowflake fabrics, attach a fabric to your SQL project and begin data modeling.