Metadata connections

When you create a metadata connection in a fabric, Prophecy connects to the data provider (such as Databricks) and caches its metadata at a regular interval. If you have thousands of objects, such as tables or views, this recurring sync can make fetching objects much faster in Prophecy, particularly in the Environment tab of the Project Editor.

Separately from metadata connections, Prophecy also supports Airflow connections, which perform a similar function for Airflow jobs.

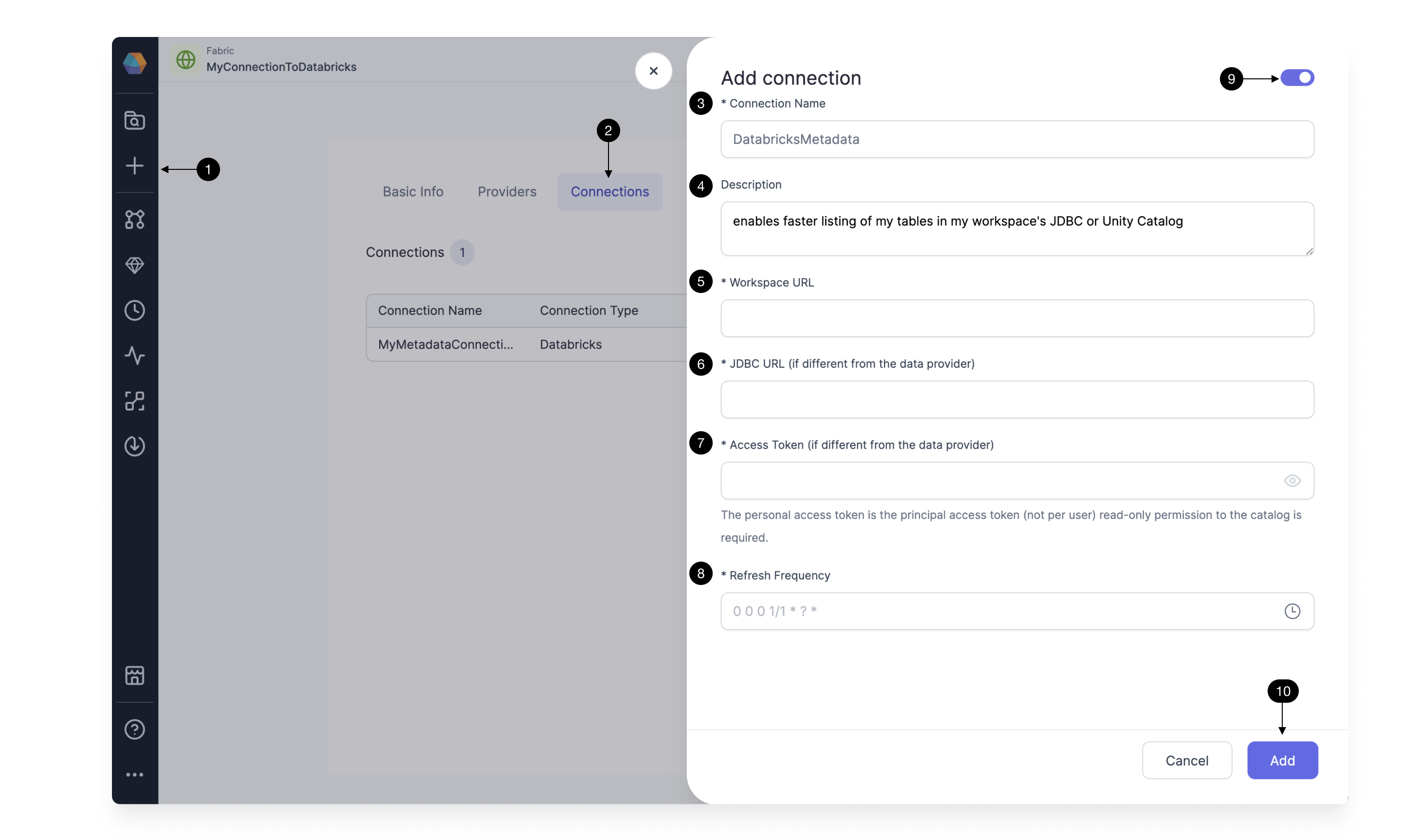

Metadata connection setup

Metadata connections are set up inside individual fabrics. This means that anyone with access to the fabric can take advantage of the metadata connection.

- Initiate the creation of a new fabric.

- In the Connections tab, add a new connection.

- Define a Connection Name.

- Give the connection a Description (optional).

- Add the Workspace URL for the connection. For example, this could be a Databricks workspace URL. In this case, the connection would sync all accessible Data Catalogs.

- If you define a JDBC URL that differs from the one defined in the fabric, only the metadata connection's JDBC URL is included in the sync.

- Add the Access Token for the connection (if different from the fabric). See the Provider tokens section for more information on access tokens.

- Set the Refresh Frequency to control how often Prophecy syncs the metadata.

- Enable the metadata connection.

- Add the metadata connection to save it.

While you can create multiple metadata connections per fabric, only one connection can be enabled at a time.
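The fields and the one-enabled-connection rule above can be modeled in a few lines of Python. This is only an illustrative sketch: the `MetadataConnection` class, its field names, and the helper functions are hypothetical and do not correspond to any Prophecy API or storage format.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import List, Optional


@dataclass
class MetadataConnection:
    """Hypothetical record mirroring the setup fields; not Prophecy's schema."""
    name: str
    workspace_url: str
    refresh_frequency: timedelta
    description: str = ""
    jdbc_url: Optional[str] = None      # None means "use the fabric's JDBC URL"
    access_token: Optional[str] = None  # None means "use the fabric's token"
    enabled: bool = False


def enable_only(connections: List[MetadataConnection], name: str) -> None:
    """Enable the named connection and disable every other one in the fabric."""
    if not any(c.name == name for c in connections):
        raise ValueError(f"no metadata connection named {name!r}")
    for c in connections:
        c.enabled = (c.name == name)


def effective_jdbc_url(conn: MetadataConnection, fabric_jdbc_url: str) -> str:
    """Return the connection's JDBC override if set, else the fabric's URL."""
    return conn.jdbc_url or fabric_jdbc_url
```

Calling `enable_only(connections, "nightly")` on a fabric's list leaves exactly one connection enabled, which matches the constraint described above.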

Metadata connection usage

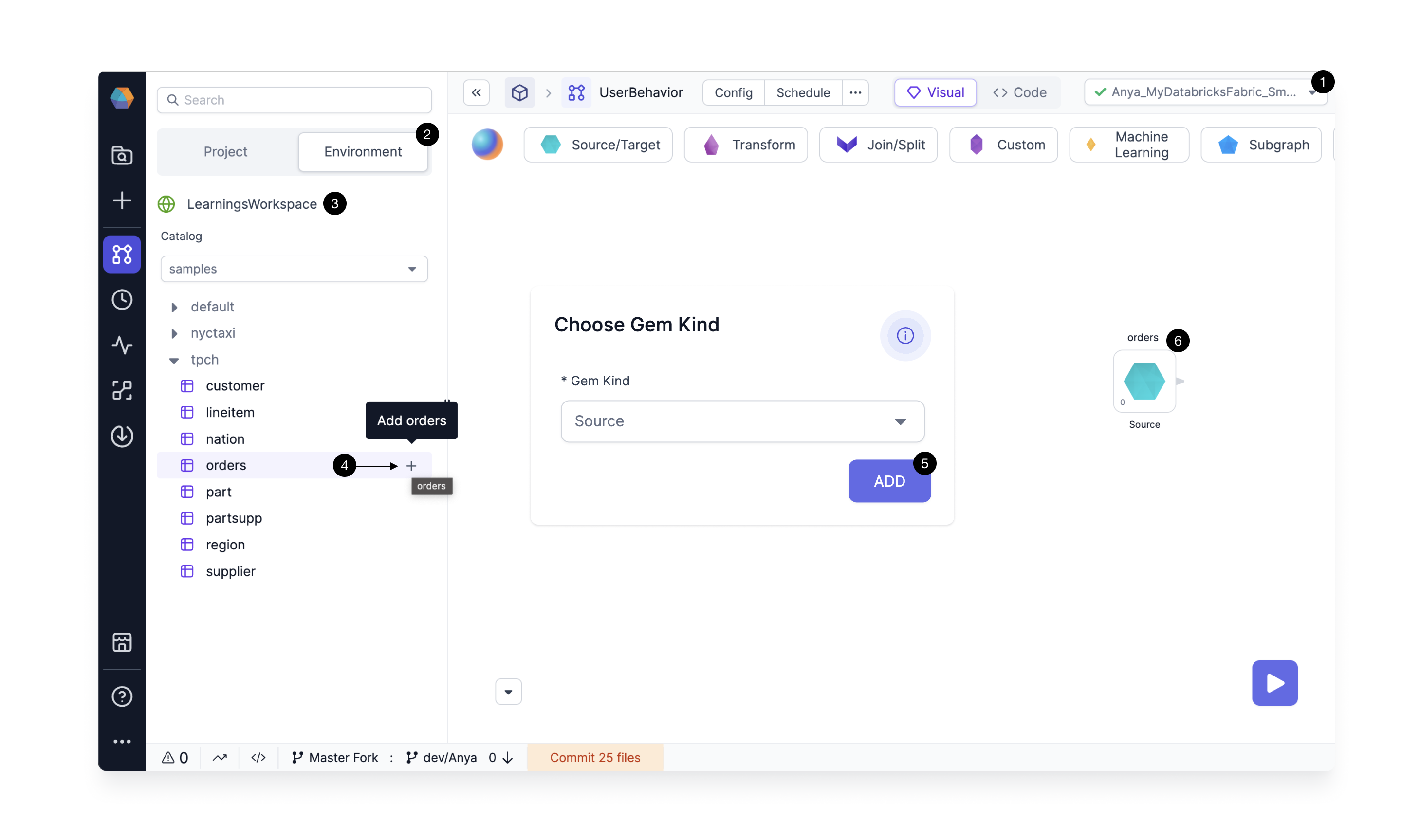

To use a metadata connection in a project:

- Attach to a fabric that has a metadata connection.

- Open the Environment tab to access the data.

- Note that the workspace is synced at the frequency defined in the metadata connection.

- Add a table to the canvas as a dataset.

- Define whether your dataset should be added as a Source or Target gem.

- Now the dataset appears on the canvas, ready for transformations.

Execution environment requirements

SQL warehouses or clusters

Prophecy supports metadata collection through SQL warehouses and clusters on Databricks and Snowflake. In general, we recommend creating a SQL warehouse or cluster dedicated to the metadata connection. That way, the recurring metadata syncs run on compute sized for that workload, while the execution clusters defined in the fabric keep a separate resource profile.

Provider tokens

Prophecy metadata connections use APIs to make calls to your data provider accounts. For this access, a service principal is recommended. For Databricks providers, follow these instructions to create a service principal and associated token. If you are unable to set up a service principal, use a standard Personal Access Token.

Permissions

Regardless of the type of credentials you use, they must have access, on the environment side, to every workspace, warehouse, or cluster that you want to see through the metadata connection.
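One way to check what a token can actually see, before handing it to a metadata connection, is to list the catalogs it has access to through the Databricks Unity Catalog REST API. The sketch below only builds the request; it assumes a Databricks workspace with Unity Catalog, and the function name and the example workspace URL are illustrative rather than part of Prophecy.

```python
import urllib.request


def build_list_catalogs_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists Unity Catalog catalogs."""
    base = workspace_url.rstrip("/")  # tolerate a trailing slash in the URL
    return urllib.request.Request(
        url=f"{base}/api/2.1/unity-catalog/catalogs",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )
```

Passing the result to `urllib.request.urlopen` sends the call. If the response is an authorization error, or a catalog you expect is missing, the credentials likely lack the access described above.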

To grant the service principal permissions on a Unity Catalog catalog (which grants the same permission on all schemas within that catalog), run the command:

GRANT SELECT ON CATALOG <catalog> TO <service_principal>;

To grant permissions to the service principal for Hive Metastore, run the command:

GRANT USAGE, READ_METADATA, SELECT ON CATALOG <hive_metastore> TO <service_principal>;
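If you manage several catalogs or service principals, the two statements above can be generated rather than typed by hand. The following is a minimal sketch, assuming Databricks SQL identifier quoting (backticks, with an embedded backtick doubled); the helper names are hypothetical, and the privilege lists are copied from the commands above.

```python
def quote_ident(name: str) -> str:
    """Wrap an identifier in backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def grant_statement(catalog: str, principal: str, hive_metastore: bool = False) -> str:
    """Render the GRANT statement for Unity Catalog or for Hive Metastore."""
    # Privilege lists mirror the two commands shown in this section.
    privileges = "USAGE, READ_METADATA, SELECT" if hive_metastore else "SELECT"
    return (
        f"GRANT {privileges} ON CATALOG {quote_ident(catalog)} "
        f"TO {quote_ident(principal)};"
    )
```

For example, `grant_statement("main", "my-sp")` renders the Unity Catalog form, and passing `hive_metastore=True` renders the Hive Metastore form. Review the generated SQL before running it in your workspace.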