December 2024

3.4.2.* (December 13, 2024)

- Prophecy Python libs version: 1.9.28

- Prophecy Scala libs version: 8.6.0

Features

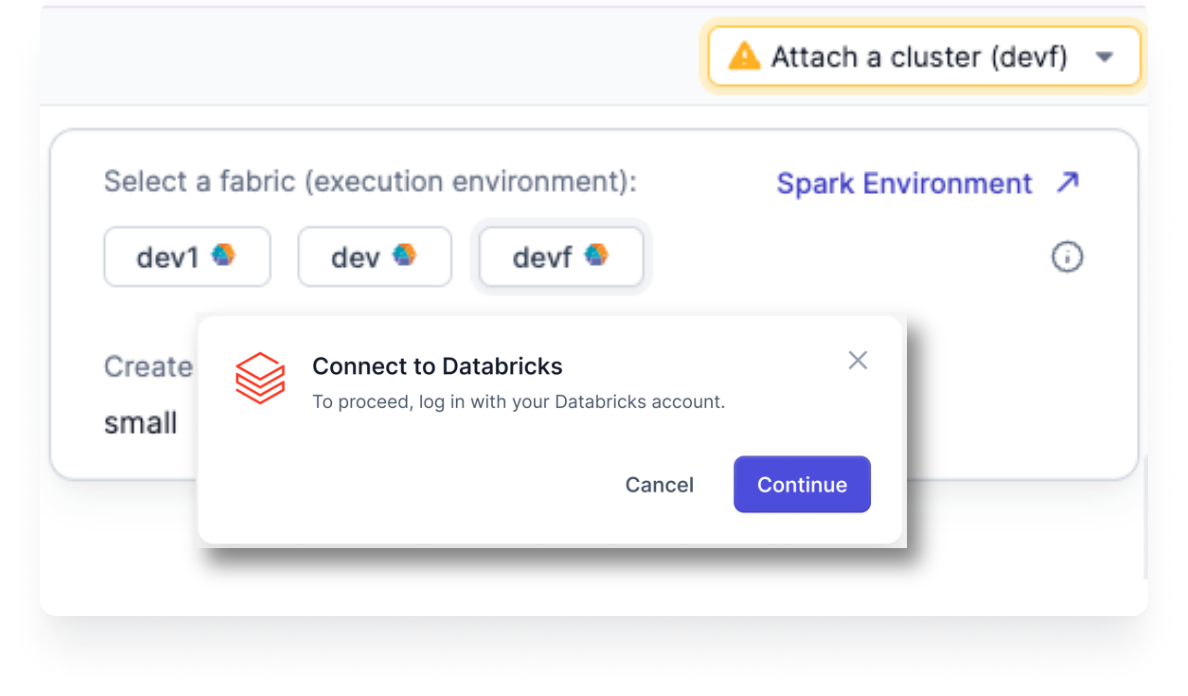

Databricks OAuth integration

Prophecy now integrates with Databricks OAuth to give you increased security through industry-standard authentication flows. When this feature is enabled, a login overlay appears in Prophecy wherever Databricks API interactions are required, such as when you select a fabric.

To enable this feature, a Databricks account admin must create an app connection in Databricks, and a Prophecy cluster admin must enter the connection details in Prophecy. See Databricks OAuth for detailed steps.

Minor Improvements

- "Offset" column name bug fix: We fixed a bug where a column named with a Snowflake reserved keyword, such as "Offset", would break when used in a Reformat gem.

- Support for null values in unit tests: When you upgrade the Scala `prophecy-libs` version to 8.6.0 or later and the Python `prophecy-libs` version to 1.9.27 or later, you may notice differences in the Prophecy-managed files related to unit tests. Specifically, changes might occur in the `prophecy/tests/*.json` files: any null values in these files will be replaced with empty strings (""). This change does not affect existing unit tests, which will continue to function as before. These files are managed by Prophecy and are not used during pipeline execution. In addition, the three-dots menu of the unit test data table has a new option, Set value as Null, for columns.

- New Spark fabric diagnostic error codes: There are new diagnostic error codes for the following failures:

  - Unable to reach Databricks endpoint.

  - Unable to write execution metrics because Hive Metastore is not enabled on your Spark.

  - Authentication fails while attempting to test a Spark fabric connection.

  For more information, see Diagnostics.
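To make the unit test file change above more concrete, here is a minimal Python sketch of the kind of diff you might see after upgrading. It is an illustration only, not Prophecy's implementation: the helper `nulls_to_empty_strings` and the sample JSON layout (`rows`, `id`, `name`) are hypothetical, and the actual structure of the `prophecy/tests/*.json` files may differ.

```python
import json


def nulls_to_empty_strings(value):
    """Recursively replace None (JSON null) with an empty string.

    Hypothetical helper, used only to illustrate the described change.
    """
    if value is None:
        return ""
    if isinstance(value, dict):
        return {key: nulls_to_empty_strings(item) for key, item in value.items()}
    if isinstance(value, list):
        return [nulls_to_empty_strings(item) for item in value]
    return value


# Assumed sample content; the real test file layout is not documented here.
before = json.loads('{"rows": [{"id": 1, "name": null}, {"id": 2, "name": "Ada"}]}')
after = nulls_to_empty_strings(before)

# Print both versions so the null -> "" difference is visible side by side.
print(json.dumps(before))
print(json.dumps(after))
```

Only the null entries change; every other value is left untouched, which matches the note that existing unit tests continue to function as before.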